INDUSTRY 4.0 IS COMING

The sage words below are excerpted from a Nov 1, 2017 blog post by Danny Bradbury (see https://sector.ca/sabotage-and-subterfuge-hacking-industrial-robots/). I include this entry today because I want to take a closer look at the connected world promised by the Industry 4.0 platform, so that we all remember to address the cybersecurity issues along the way to the promised land of true manufacturing automation.

--------------------------------

Isaac Asimov’s three laws of robotics are safe, sensible rules. First laid out in 1942, rule number one prevents a robot from harming a human being. The second forces it to obey orders given to it by people, except where such orders would conflict with the first law. Finally, it must protect its own existence as long as such protection does not conflict with the first or second law.

Those are some pretty sound, sensible rules – and Stefano Zanero has persuaded industrial robots to break all of them.

Zanero, an associate professor at Italian university Politecnico di Milano, will explain how in his talk at SecTor later this month. He has found flaws in industrial robots and developed theoretical attacks that could dramatically affect corporate users, and worse.

“I was taking coffee with a colleague that works on robotics, and was looking at their labs. While we were talking, I was going through all the research that I had read in the last few years. I realized that I had not seen anybody look into one of these things,” he says. That’s not surprising. “Not everybody has an €80,000 robot sitting above their lab.”

Zanero did. Robotics is a key research area at the Politecnico di Milano, so he set to work investigating several robots’ underlying security protections. He found some common flaws.

“Most of the components in the robot were relatively weak. They were not designed to withstand hacking attacks,” he says.

In one of the robots he investigated, he found a default user that couldn’t be disabled, and a default password that couldn’t be changed. “When you compromise the first Internet-facing component, all the other components are basically also yours,” he explains. Those components all download the firmware from the first, compromised component without checking code signatures.
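
To make the missing check concrete, here is a minimal sketch of a component refusing firmware whose authenticity tag does not verify. It is an illustration only, not the vendor's fix: the key, the function names, and the use of HMAC-SHA256 are all assumptions. HMAC is a symmetric stand-in chosen because it ships with Python's standard library; real code signing would normally use an asymmetric signature, so that a compromised component holding only a public key could not forge valid firmware.

```python
import hashlib
import hmac

# Hypothetical key provisioned on each component (an assumption for this sketch).
TRUSTED_KEY = b"example-provisioning-key"


def sign_firmware(image: bytes, key: bytes = TRUSTED_KEY) -> bytes:
    """Return an HMAC-SHA256 tag computed over the firmware image."""
    return hmac.new(key, image, hashlib.sha256).digest()


def verify_firmware(image: bytes, tag: bytes, key: bytes = TRUSTED_KEY) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = hmac.new(key, image, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag)


def install_firmware(image: bytes, tag: bytes) -> str:
    """Refuse to install any image whose tag does not verify."""
    if not verify_firmware(image, tag):
        return "rejected"
    return "installed"
```

With a check like this in place, an image modified after it was tagged would be rejected rather than installed by the downstream components.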

BREAKING LAW NUMBER TWO

In compromising this software, an attacker is able to violate Asimov’s second law by giving the robot new instructions that its original programmers didn’t intend.

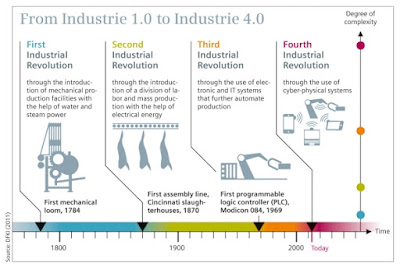

Industrial control systems built to this level of security are not meant to be Internet facing, he adds, and yet the move towards ‘Industry 4.0’ – a connected factory environment in which robots and other industrial systems are accessible via IoT-based networks – is increasingly putting them there. Many industrial robots today are a browser away from the Internet or in some cases directly connected, he warns.

What could he make a robot do with these vulnerabilities? He came up with several possibilities. The first was the introduction of micro-defects.

“If you get control of a robot, you can introduce in a subtle way a lot of micro-defects into the parts being manufactured. These defects would be too small to be perceived,” he says. “Since the robot isn’t designed with this attack model in mind, there is absolutely no way for the people programming the robot to realise that it has been put off centre and miscalibrated.”

A slight offset in a welding algorithm could produce a structural flaw that could have significant implications for product safety. Imagine a production line altered to produce unsafe automotive components. A year after the attack, the attacker could make the flaw known and force a product recall, costing the victim millions and trashing their brand. Worse still would be not making the flaw known, waiting instead until road accidents started happening.
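
A small numerical sketch shows why such an offset can slip through. All figures here are hypothetical (nominal dimension, tolerance, offset, and the noise pattern are invented for illustration, not taken from Zanero's research): every tampered part still passes a per-part tolerance check, and the systematic shift only shows up when many measurements are averaged.

```python
from statistics import mean

NOMINAL_MM = 10.0    # hypothetical target dimension
TOLERANCE_MM = 0.10  # hypothetical per-part acceptance tolerance
OFFSET_MM = 0.04     # hypothetical offset introduced by the attacker


def within_tolerance(value: float) -> bool:
    """Per-part check: accept anything close enough to nominal."""
    return abs(value - NOMINAL_MM) <= TOLERANCE_MM


# Deterministic stand-in for ordinary process noise (100 parts).
noise = [-0.03, -0.01, 0.0, 0.01, 0.03] * 20
clean = [NOMINAL_MM + n for n in noise]
tampered = [value + OFFSET_MM for value in clean]

# Every tampered part still passes inspection individually...
all_pass = all(within_tolerance(value) for value in tampered)

# ...but the average has moved away from nominal by roughly the offset.
bias = mean(tampered) - NOMINAL_MM
```

In this toy data the largest tampered part measures about 10.07 mm, comfortably inside the 0.10 mm tolerance, which is the sense in which the defect is "too small to be perceived" part by part.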

GOODBYE, LAW NUMBER THREE

“The second big area of concern is that using the same manipulations, you can actually make a robot destroy itself,” says Zanero.

There goes Asimov’s third law, and with it, your factory’s profit. Production lines have a high downtime cost, running into thousands of dollars per minute. Robots are also custom-configured and hard to source, which makes them difficult to replace.

This also raises the possibility of ransomware, says Zanero. An attacker could incapacitate a robot and then demand a ransom payment to set it going again. That would change the attacker’s business model from industrial sabotage to pure profit.

VIOLATING THE MOST IMPORTANT LAW OF ALL

Another possibility is that the robot could be programmed to violate the first law, harming a human directly. This would admittedly be difficult for an attacker to do. Robots working alongside humans are tightly monitored and designed not to make movements that could harm their coworkers. Nevertheless, there is scope for abuse, Zanero says.

“Even if the robot moves slowly and doesn’t really harm you by moving, if the point of the tool is toward you, it could harm you,” he says. Robots are programmed to keep pointy things away from people. “They are super good at that. There is a lot of safety around that, but it is software, not hardware,” he points out, adding that an attacker could change that software.

To its credit, the industrial robot vendor that Zanero’s team contacted about the flaws was responsive and quick to react. It thanked the team and patched the bug in its products, which is an encouraging sign. Nevertheless, there is more work for the robotics industry to do.

“We have tested one specific robot, and then we tested others just to see if our architectural considerations would generalize,” he says. “And they did.”